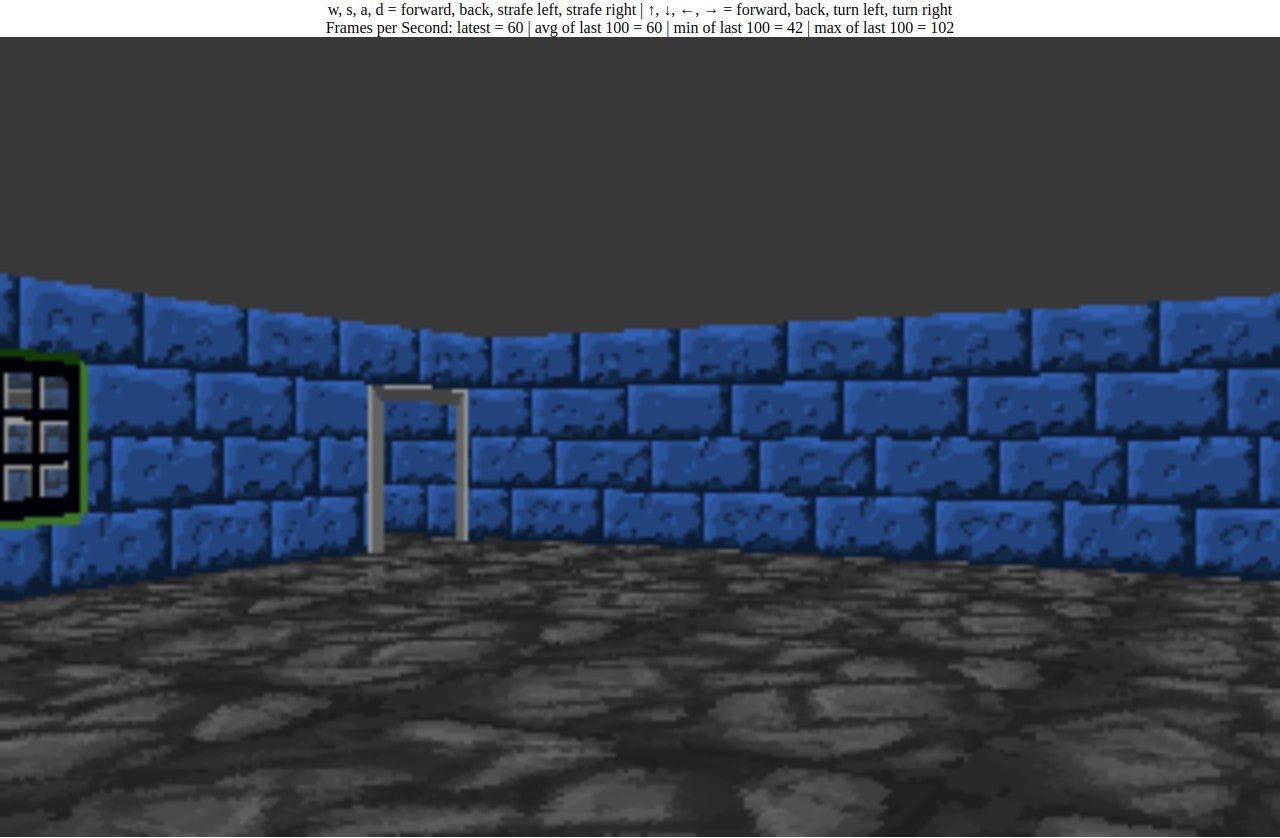

A Floor Beneath Your Feet

66 Days Until I Can Walk

A lot got done today. And now that I’m in the process of writing up my blog post, I’m starting to realise just how much. It’s been a good day in many, many ways. Obviously the big success is that floor texturing now works.

However, in addition to that, I also got some virtual joysticks working for the mobile version of the site. I need to do some proper checking for how far a stick is pushed so that I can adjust turn and movement speed accordingly, but at least now people on mobile can look around the scene a little bit. There’s a major refactoring of the codebase coming soon, and this will likely be one of the issues addressed.

I have also fixed my user input code to handle different keyboard layouts. This was based on some feedback I received in relation to the project on Reddit. You can read about specifically what I did further down this post.

Floor Texturing

In order to implement floor texturing, I looked again at F. Permadi’s tutorials. Casting rays for floors is, in some ways, a good deal simpler than casting for walls. Permadi himself mentions in the comments of his code that his implementation is very easy to optimise. I’ll talk about some of the ways in which I have done this later. For now, let’s talk about how floor texturing works.

Taking a single column of the screen as an example, after we have finished rendering a wall, we then want to render the floor starting from the bottom of the wall and continuing to the bottom of the projection plane. In order to do this, we cast a ray out from the eye of the player, through the pixel on the projection plane that we are currently trying to texture with a floor tile, and then out into the world. I will give a detailed explanation of how this works in a future post, but a good description is given by Permadi on the page linked above.

We follow the ray until it hits a floor tile. We then compute the point of intersection between the ray and the tile and map that to a texture. We select a single pixel from that texture at the appropriate point and render it to the screen.

Rendering the walls takes a single ray per column: we get the points of intersection with each grid line, and then draw the appropriate texture scaled to a height based on the distance from the player. With floor textures, by contrast, we must fire a ray for each individual pixel that we want to render to. This is because, of course, floor tiles can be skewed depending on which direction we look at them from.
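A minimal sketch of the per-pixel floor lookup described above, written in JavaScript to match the listings in this post rather than the engine's Rust. Every constant, the `floorTexel` name, and the coordinate convention (world y increasing with the sine of the ray angle) are assumptions made for illustration, not values taken from the engine.

```javascript
// Hypothetical constants, chosen for illustration only.
const TILE_SIZE = 64;        // world units per tile, and texels per texture side
const PLAYER_HEIGHT = 32;    // eye height above the floor
const PLANE_HEIGHT = 200;    // projection plane height in pixels
const PLANE_CENTRE_Y = PLANE_HEIGHT / 2;
const DIST_TO_PLANE = 277;   // distance from the eye to the projection plane

// Returns the floor point and texel seen through the pixel in `row`,
// for a ray cast at `rayAngle` (radians) while the player faces `viewAngle`.
// `row` must lie below the centre of the projection plane.
function floorTexel(player, rayAngle, viewAngle, row) {
  // Similar triangles give the distance measured straight ahead of the player.
  const straight = (PLAYER_HEIGHT * DIST_TO_PLANE) / (row - PLANE_CENTRE_Y);
  // Dividing by the cosine recovers the distance along the (skewed) ray.
  const dist = straight / Math.cos(rayAngle - viewAngle);
  const worldX = player.x + dist * Math.cos(rayAngle);
  const worldY = player.y + dist * Math.sin(rayAngle);
  // Wrap the world position into a single tile to get texture coordinates.
  const wrap = (v) => ((Math.floor(v) % TILE_SIZE) + TILE_SIZE) % TILE_SIZE;
  return { worldX, worldY, texX: wrap(worldX), texY: wrap(worldY) };
}
```

Calling `floorTexel` once per pixel, from the bottom of the wall down to the bottom of the projection plane, fills in one column of floor.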

There are two simple optimisations that can be applied to Permadi’s implementation. It’s likely you can already guess what I am going to propose:

- Convert all floating point arithmetic to fixed point arithmetic

- Use lookup tables to store values that can be precomputed

Converting everything to fixed point arithmetic (FPA) is trivially simple, of course, and has already been implemented.
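For readers who have not met fixed point arithmetic before, here is a small illustrative sketch. The 16.16 format and the helper names (`toFp`, `fromFp`, `fpMul`, `fpDiv`) are assumptions for the example and are not taken from the engine. It also assumes operands are small enough that the intermediate product stays below 2^53, the limit of exact integers in a JavaScript number.

```javascript
// Assumed format: 16 integer bits, 16 fractional bits.
const FP_SHIFT = 16;
const FP_ONE = 1 << FP_SHIFT; // the value 1.0 in fixed point

// Convert between ordinary numbers and fixed point integers.
const toFp = (x) => Math.round(x * FP_ONE);
const fromFp = (f) => f / FP_ONE;

// A raw product carries 32 fractional bits, so scale it back down to 16.
const fpMul = (a, b) => Math.floor((a * b) / FP_ONE);

// Pre-scale the dividend so the quotient keeps its 16 fractional bits.
const fpDiv = (a, b) => Math.floor((a * FP_ONE) / b);
```

For example, `fromFp(fpMul(toFp(1.5), toFp(2.25)))` gives back 3.375, with every intermediate value held as an integer.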

Generating lookup tables is also quite straightforward. The ray that is fired will be of constant length for each column/row combination of the projection plane. This means that we can compute the length of the ray, and its skew (exactly the same problem that causes the fisheye effect), at compile time and save ourselves some runtime cycles. I hope to add this tomorrow. Because we are only projecting from the bottom half of the projection plane, the size of the lookup table should be PROJECTION_PLANE_WIDTH * PROJECTION_PLANE_HEIGHT / 2.
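A rough sketch of such a table, again in JavaScript for consistency with the listings in this post. JavaScript has no compile-time step, so the table here is built once at startup instead. The dimensions, the `buildFloorDistanceTable` and `floorDistance` names, and the choice to treat the first floor row as one pixel below the horizon are all assumptions for illustration.

```javascript
// Hypothetical projection plane parameters.
const PLANE_WIDTH = 320;
const PLANE_HEIGHT = 200;
const HALF_HEIGHT = PLANE_HEIGHT / 2;
const PLAYER_HEIGHT = 32;
const DIST_TO_PLANE = 277;

// One entry per (column, row) pair in the bottom half of the plane.
function buildFloorDistanceTable() {
  const table = new Float64Array(PLANE_WIDTH * HALF_HEIGHT);
  for (let r = 0; r < HALF_HEIGHT; r++) {
    // r = 0 is treated as one pixel below the horizon to avoid dividing by zero.
    const straight = (PLAYER_HEIGHT * DIST_TO_PLANE) / (r + 1);
    for (let col = 0; col < PLANE_WIDTH; col++) {
      // Angle between this column's ray and the view direction.
      const beta = Math.atan((col - PLANE_WIDTH / 2) / DIST_TO_PLANE);
      // Same cosine factor that causes the fisheye effect on walls.
      table[r * PLANE_WIDTH + col] = straight / Math.cos(beta);
    }
  }
  return table;
}

const FLOOR_DISTANCE = buildFloorDistanceTable();

// Look up the ray length for a screen pixel; row must be >= HALF_HEIGHT.
function floorDistance(col, row) {
  return FLOOR_DISTANCE[(row - HALF_HEIGHT) * PLANE_WIDTH + col];
}
```

With these numbers the table holds 320 * 200 / 2 = 32,000 entries, and the per-pixel division and cosine are replaced by a single array read at render time.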

WASD Key-Bindings In JavaScript (And Beyond)

Yesterday I posted a little project update on the /r/rust subreddit, just to try and keep people engaged with what I’m doing here. One piece of feedback I received was in relation to how I am handling user input in JavaScript. In my js file, I have a callback registered for key-up and key-down events. The callback updates a global dictionary of key states so that an entry for a key is true when down and false when up. This is how I can let the player strafe and turn simultaneously, for example.

The implementation looked something like the following:

let keystate = {}

document.addEventListener('keydown', (event) => { keystate[event.key] = true; }, false);

document.addEventListener('keyup', (event) => { keystate[event.key] = false; }, false);

function events() {

if (keystate['w']) { player_forward(); }

if (keystate['s']) { player_back(); }

if (keystate['a']) { player_strafe_left(); }

if (keystate['d']) { player_strafe_right(); }

if (keystate['ArrowLeft']) { player_turn_left(); }

if (keystate['ArrowRight']) { player_turn_right(); }

}

The problem here is that event.key is tied to the value of the key being pressed, but not its physical location. What this means is that, while WASD bindings work fine on my QWERTY keyboard, people with Dvorak or French AZERTY keyboards do not benefit from the same “gamepad” layout of these keys. The solution, as given by stefnotch, is to use event.code, which is tied to the physical location of a key, rather than its value.

Comment by u/stefnotch from the discussion “Learning Rust Until I Can Walk Again (Update)” in r/rust

This advice, of course, does not only apply to JavaScript. For example, if implementing a game with SDL2, then WASD keybindings should be implemented using SDL_Scancode and not SDL_Keycode.

Updating my JavaScript code is trivially simple:

let keystate = {}

// use event.code instead of event.key in keyup/keydown callbacks

document.addEventListener('keydown', (event) => { keystate[event.code] = true; }, false);

document.addEventListener('keyup', (event) => { keystate[event.code] = false; }, false);

function events() {

// use codes when checking key state

if (keystate['KeyW']) { player_forward(); }

if (keystate['KeyS']) { player_back(); }

if (keystate['KeyA']) { player_strafe_left(); }

if (keystate['KeyD']) { player_strafe_right(); }

if (keystate['ArrowLeft']) { player_turn_left(); }

if (keystate['ArrowRight']) { player_turn_right(); }

}

Adding Virtual Joysticks

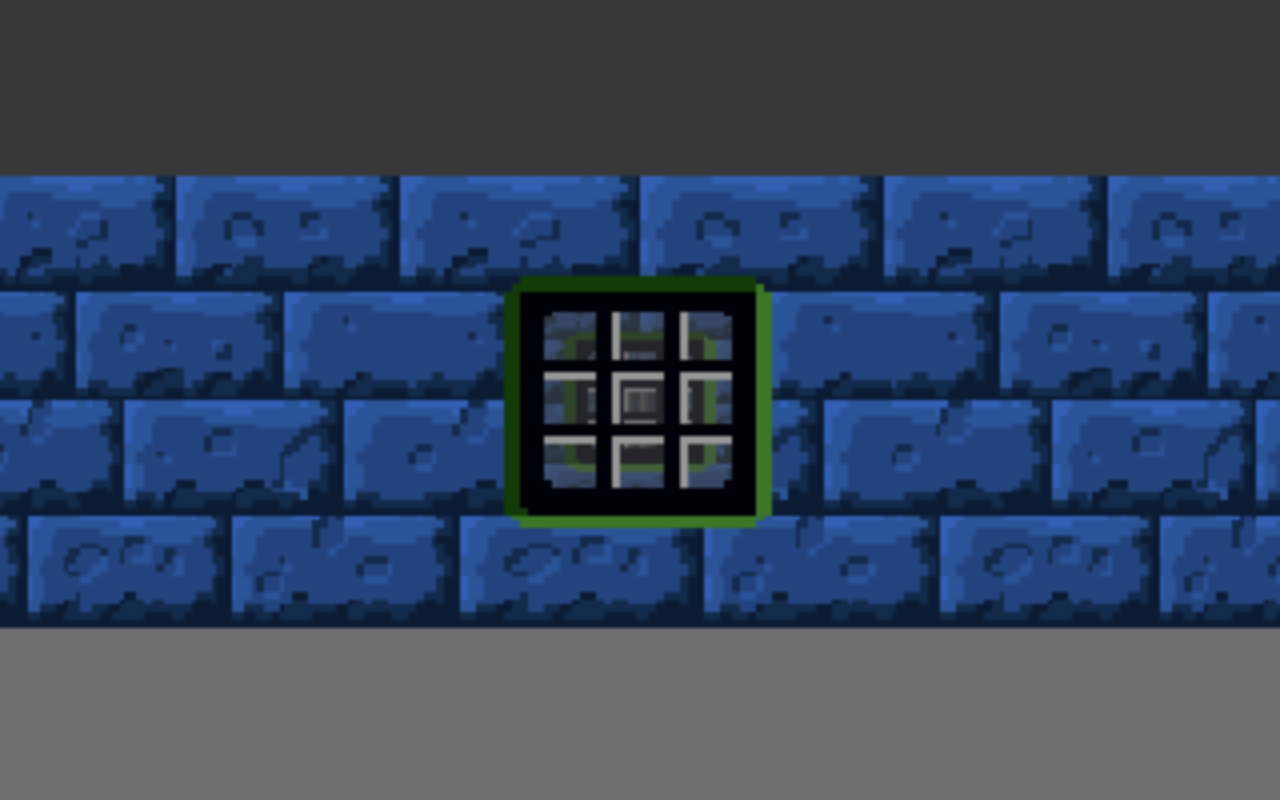

Up to this point, users who have logged into the site to check out the engine on a mobile device have not had any way to move about. They are faced with what is essentially a static render of a view through a window.

I wanted to give mobile users some way to interact with the site, so this morning I added two virtual joysticks to the page. These joysticks will only render on mobile devices. In order to do this I made use of the JoyStick2 JavaScript library by Roberto D’Amico. This gave me a trivially simple way to insert and style two joysticks on the engine page.

The callbacks for the joysticks return the displacement of the stick, and its cardinal direction (N, NE, E, SE, S, SW, W, NW). For now I have just used the cardinal direction to determine what direction the user is trying to move in. So the user experience on mobile is still not great. I’ll need to add some control where the speed of the player varies with the displacement of the stick. But for now, at least mobile users have a bit more interactivity than before.
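One possible shape for that speed control is sketched below. It does not use the joystick library's API at all: `axisToSpeed` is a hypothetical helper that takes a raw displacement along one axis, the maximum displacement the stick can report, and a top speed, all of which are assumptions for illustration. A small dead zone is included so that a resting thumb does not cause drift.

```javascript
// Fraction of full stick travel that is ignored (assumed value).
const DEAD_ZONE = 0.15;

// Map a stick displacement along one axis to a signed speed.
function axisToSpeed(displacement, maxDisplacement, maxSpeed) {
  // Normalise to the range [-1, 1], clamping any overshoot.
  const n = Math.max(-1, Math.min(1, displacement / maxDisplacement));
  const magnitude = Math.abs(n);
  if (magnitude < DEAD_ZONE) {
    return 0;
  }
  // Rescale so speed ramps from 0 at the dead zone edge to maxSpeed at full push.
  const scaled = (magnitude - DEAD_ZONE) / (1 - DEAD_ZONE);
  return Math.sign(n) * scaled * maxSpeed;
}
```

The returned value could then scale the movement or turn step each frame, in place of the fixed step used when only the direction is known.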

Figuring out whether or not I was on mobile was trickier than I expected. There is a CSS based solution where you check the maximum resolution of the screen in order to determine whether or not you are on a mobile device. However, this did not work for me and in the end I opted for the JavaScript solution shown below:

window.mobileAndTabletCheck = function() {

let check = false;

(function(a){if(/(android|bb\d+|meego).+mobile|avantgo|bada\/|blackberry|blazer|compal|elaine|fennec|hiptop|iemobile|ip(hone|od)|iris|kindle|lge |maemo|midp|mmp|mobile.+firefox|netfront|opera m(ob|in)i|palm( os)?|phone|p(ixi|re)\/|plucker|pocket|psp|series(4|6)0|symbian|treo|up\.(browser|link)|vodafone|wap|windows ce|xda|xiino|android|ipad|playbook|silk/i.test(a)||/1207|6310|6590|3gso|4thp|50[1-6]i|770s|802s|a wa|abac|ac(er|oo|s\-)|ai(ko|rn)|al(av|ca|co)|amoi|an(ex|ny|yw)|aptu|ar(ch|go)|as(te|us)|attw|au(di|\-m|r |s )|avan|be(ck|ll|nq)|bi(lb|rd)|bl(ac|az)|br(e|v)w|bumb|bw\-(n|u)|c55\/|capi|ccwa|cdm\-|cell|chtm|cldc|cmd\-|co(mp|nd)|craw|da(it|ll|ng)|dbte|dc\-s|devi|dica|dmob|do(c|p)o|ds(12|\-d)|el(49|ai)|em(l2|ul)|er(ic|k0)|esl8|ez([4-7]0|os|wa|ze)|fetc|fly(\-|_)|g1 u|g560|gene|gf\-5|g\-mo|go(\.w|od)|gr(ad|un)|haie|hcit|hd\-(m|p|t)|hei\-|hi(pt|ta)|hp( i|ip)|hs\-c|ht(c(\-| |_|a|g|p|s|t)|tp)|hu(aw|tc)|i\-(20|go|ma)|i230|iac( |\-|\/)|ibro|idea|ig01|ikom|im1k|inno|ipaq|iris|ja(t|v)a|jbro|jemu|jigs|kddi|keji|kgt( |\/)|klon|kpt |kwc\-|kyo(c|k)|le(no|xi)|lg( g|\/(k|l|u)|50|54|\-[a-w])|libw|lynx|m1\-w|m3ga|m50\/|ma(te|ui|xo)|mc(01|21|ca)|m\-cr|me(rc|ri)|mi(o8|oa|ts)|mmef|mo(01|02|bi|de|do|t(\-| |o|v)|zz)|mt(50|p1|v )|mwbp|mywa|n10[0-2]|n20[2-3]|n30(0|2)|n50(0|2|5)|n7(0(0|1)|10)|ne((c|m)\-|on|tf|wf|wg|wt)|nok(6|i)|nzph|o2im|op(ti|wv)|oran|owg1|p800|pan(a|d|t)|pdxg|pg(13|\-([1-8]|c))|phil|pire|pl(ay|uc)|pn\-2|po(ck|rt|se)|prox|psio|pt\-g|qa\-a|qc(07|12|21|32|60|\-[2-7]|i\-)|qtek|r380|r600|raks|rim9|ro(ve|zo)|s55\/|sa(ge|ma|mm|ms|ny|va)|sc(01|h\-|oo|p\-)|sdk\/|se(c(\-|0|1)|47|mc|nd|ri)|sgh\-|shar|sie(\-|m)|sk\-0|sl(45|id)|sm(al|ar|b3|it|t5)|so(ft|ny)|sp(01|h\-|v\-|v )|sy(01|mb)|t2(18|50)|t6(00|10|18)|ta(gt|lk)|tcl\-|tdg\-|tel(i|m)|tim\-|t\-mo|to(pl|sh)|ts(70|m\-|m3|m5)|tx\-9|up(\.b|g1|si)|utst|v400|v750|veri|vi(rg|te)|vk(40|5[0-3]|\-v)|vm40|voda|vulc|vx(52|53|60|61|70|80|81|83|85|98)|w3c(\-| )|webc|whit|wi(g |nc|nw)|wmlb|wonu|x700|yas\-|your|zeto|zte\-/i.test(a.substr(0,4))) 
check = true;})(navigator.userAgent||navigator.vendor||window.opera);

return check;

};

I found this in a StackOverflow post by Michael Zaporozhets. It uses a massive regular expression to test the browser’s user-agent string. If the string matches a known mobile or tablet browser, then the mobile version of the site is rendered.

Conclusion

So that just about wraps things up for today. Tomorrow I will get texturing working for the ceiling too. Then I will spend the rest of the week performing a massive refactor of my code in an effort to make everything just a little more Rust, and a lot less messy.